The cloud isn't evil - it's just unnecessary for most businesses. Modern tools have changed the game.

The Cloud Illusion

For over a decade, companies have blindly migrated to AWS, Azure, and Google Cloud, treating them as the holy grail of IT infrastructure. But after managing enterprise-grade systems for years, I've discovered a hard truth: the cloud is often a costly crutch. Unless you're operating at hyperscale (think Netflix), on-premise solutions with modern tools deliver superior performance, cost efficiency, and control.

The Only Cloud Expense We Recommend

Need a public IP? Rent a single cloud instance ($300/month max) as a WireGuard VPN gateway. That's it. No bloated subscriptions, no vendor lock-in.

The Modern On-Premise Stack

1. Rust: The Power of Safe Concurrency and Smart Synchronization

Rust stands apart for its fearless concurrency: it lets developers build multi-threaded and asynchronous systems that are both safe and highly efficient. Unlike languages that depend on runtime checks or garbage collection, Rust enforces memory and thread safety at compile time through its ownership model and borrow checker. This design rules out data races and use-after-free bugs in safe code before deployment. Deadlocks and memory leaks are still possible, but they are far easier to reason about, which makes Rust ideal for high-performance backend systems.

- Thread-Safe by Design: Rust’s compiler prevents unsafe shared-state access across threads.
- Async and Sync Harmony: Rust’s async system blends smoothly with synchronization tools like Arc and Mutex, enabling scalable concurrency.
- Predictable Performance: Rust’s zero-cost abstractions deliver C-level speed with modern safety guarantees.
- Memory Safety: The borrow checker enforces valid references and prevents data races at compile time.
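As a minimal, standard-library-only sketch of the Arc and Mutex pairing mentioned above: several threads increment one shared counter, and the compiler rejects any version that tries to share the counter without the lock. The function name `count_in_parallel` is made up for this illustration and is not part of our codebase.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Spawns `workers` threads that each add 1 to a shared counter
/// `per_worker` times, then returns the final count.
fn count_in_parallel(workers: usize, per_worker: usize) -> usize {
    // Arc gives every thread shared ownership; Mutex serializes the writes.
    let counter = Arc::new(Mutex::new(0usize));

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_worker {
                    // The guard is a temporary, so the lock is released
                    // at the end of this statement.
                    *counter.lock().unwrap() += 1;
                }
            })
        })
        .collect();

    // Wait for every worker before reading the result.
    for handle in handles {
        handle.join().unwrap();
    }

    let total = *counter.lock().unwrap();
    total
}
```

Calling `count_in_parallel(4, 1_000)` always yields 4000, because no increment can be lost. Replacing the `Arc<Mutex<usize>>` with a plain `&mut usize` captured by several threads does not compile, which is the guarantee the list describes.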

Here’s how safe shared-state management looks in our production systems:

```rust
use std::collections::HashSet;
use std::rc::Rc;
use std::sync::{Arc, Mutex};

use actix_web::{web::{self, ReqData}, Error, HttpResponse};
use authmiddleware::{AuthInfo, HttpClient};
use log::{debug, info};
use regex::RegexSet;
use serde_json::json;

use crate::utility::apicalls;
use crate::utility::extractors::{self, AppCache};

// `get_user_group`, `get_group_permissions`, and `check_existance` are
// project helpers that are not shown in this excerpt.
pub async fn check_user_permissions(
    cache: web::Data<Arc<Mutex<AppCache>>>,
    userdata: web::Json<extractors::CheckPathPermission>,
    auth_info: Option<ReqData<Rc<AuthInfo>>>,
    http_client: Option<ReqData<HttpClient>>,
) -> Result<HttpResponse, Error> {
    // Request-local data; unwrap() panics if it was never attached to the request.
    let auth_info: Rc<AuthInfo> = auth_info.unwrap().into_inner();
    let (user_id, app_id) = auth_info.get_data();
    let http_client = http_client.unwrap().get_client();

    let key = /* key derivation elided in the original */;

    // Look up the cache in a short scope so the guard is dropped before any
    // `.await`. A poisoned lock is recovered instead of panicking.
    // Assumes `get_url` returns an owned `Option<RegexSet>`.
    let cached = {
        let cache = cache.lock().unwrap_or_else(|poisoned| poisoned.into_inner());
        cache.get_url(&key)
    };
    if let Some(permission_paths) = cached {
        return check_existance(&userdata.path, &permission_paths).await;
    }

    // Cache miss: fetch the user's groups, then the URL patterns they grant.
    let group_perms = get_user_group(&http_client, user_id, userdata.inst_id).await.unwrap();
    let urls: HashSet<String> =
        get_group_permissions(&http_client, group_perms.group_ids, group_perms.permisison_ids)
            .await
            .unwrap();
    let permission_paths: RegexSet = RegexSet::new(urls).unwrap();

    // Re-acquire the lock only long enough to store the compiled set.
    cache
        .lock()
        .unwrap_or_else(|poisoned| poisoned.into_inner())
        .set_url(key, permission_paths.clone());

    check_existance(&userdata.path, &permission_paths).await
}
```

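This implementation showcases Rust’s ability to handle asynchronous execution and shared-state synchronization safely. Wrapping the cache in Arc<Mutex<AppCache>> lets multiple concurrent tasks read and update it without data corruption or data races, and recovering from a poisoned lock keeps one panicking request from taking the cache down with it. The lock should be held only for the lookup and the insert, never across an .await, so that slow permission fetches do not stall other requests. This is why we reach for Rust when building secure, high-performance backend systems.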
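The two lock-handling ideas in the handler, recovering from a poisoned mutex and keeping the lock released during slow work, can be sketched with the standard library alone. Everything here is a hypothetical stand-in: `Cache` replaces `AppCache` with a plain `HashMap`, and `lock_cache` and `get_or_load` are names invented for this example.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex, MutexGuard};

/// Hypothetical stand-in for the article's `AppCache`.
type Cache = Arc<Mutex<HashMap<String, Vec<String>>>>;

/// Locks the cache, recovering the data if a previous holder panicked.
fn lock_cache(cache: &Cache) -> MutexGuard<'_, HashMap<String, Vec<String>>> {
    cache.lock().unwrap_or_else(|poisoned| poisoned.into_inner())
}

/// Returns the cached value for `key`, or runs `load` and stores its result.
/// The lock is not held while `load` runs.
fn get_or_load<F>(cache: &Cache, key: &str, load: F) -> Vec<String>
where
    F: FnOnce() -> Vec<String>,
{
    // The guard is a temporary and is dropped at the end of this statement.
    let cached = lock_cache(cache).get(key).cloned();
    if let Some(hit) = cached {
        return hit;
    }

    // Slow work (a network call in the real handler) happens unlocked.
    let value = load();

    // Lock again just long enough to store the result.
    lock_cache(cache).insert(key.to_string(), value.clone());
    value
}
```

The trade-off of this design is that two callers missing the cache at the same moment may both run `load`, and the later insert wins. For a permissions cache that is usually acceptable, since both results are equivalent and no caller ever waits on another's network call.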

2. Rust: The Efficiency Multiplier

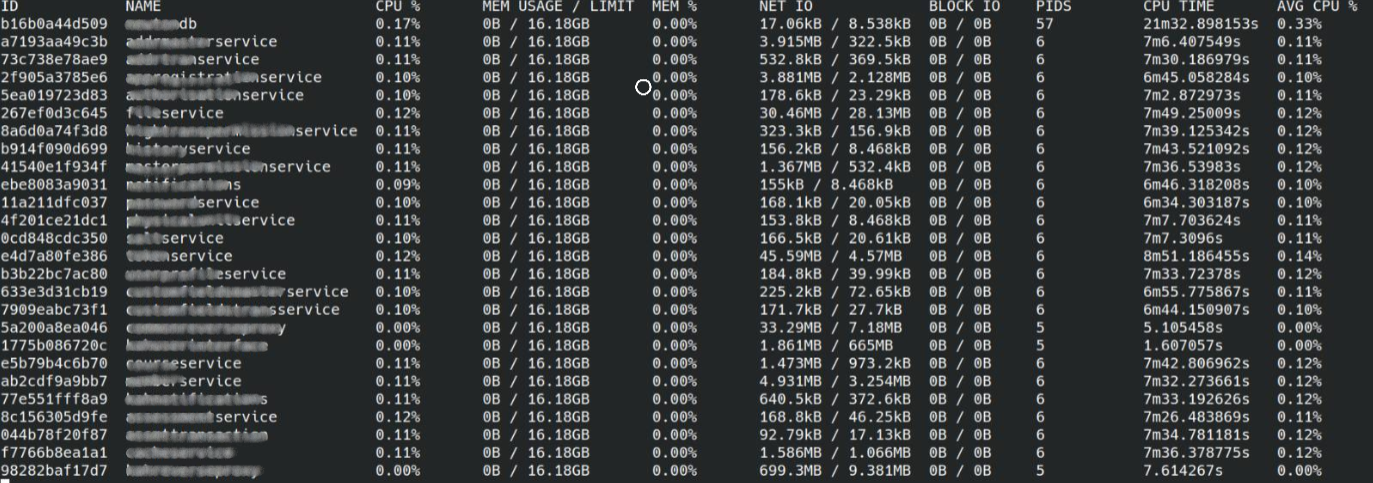

Our 80 microservices written in Rust consume just 10MB of RAM each, about 800MB in total, compared to 3GB+ each (240GB or more across the fleet) for equivalent Python services. This efficiency allows us to run production workloads on a single 2-core server, hardware that would gather dust in most data centers.

3. Immutable Operating Systems

With tools like SUSE's MicroOS (openSUSE MicroOS, SLE Micro), OS maintenance is now atomic. Updates apply in seconds, rollbacks are trivial, and crashes are extinct. Our servers haven't needed a reboot in 18 months.

4. Containerization Without Complexity

Using Podman/Docker, even junior developers deploy and manage infrastructure. No Kubernetes overengineering—just simple, reproducible containers.

Rust-Powered Innovation

At Zeravshan Technologies LLP, we harness the power of Rust to craft scalable, high-performance solutions that redefine efficiency and innovation. 🌍

- Backend: Lightning-fast APIs with Actix Web.
- Frontend: Modern, dynamic UIs using Yew + WebAssembly.
- Database: Type-safe queries powered by Diesel ORM.
- Deployment: Portable, scalable solutions with Docker.
- Architecture: 13+ microservices seamlessly integrated in production.

✔️ Runs efficiently on cost-effective servers, delivering high throughput and minimal latency while showcasing Rust’s unparalleled strength in resource-optimized environments.

The Serverless Deception

Serverless computing markets itself as cost-effective simplicity. In reality:

- Hidden Costs: Execution fees balloon as traffic grows, often exceeding dedicated server costs.
- Unmaintainable Spaghetti: Even seasoned developers abandon serverless projects due to debugging nightmares.
- Vendor Lock-In: Your code becomes inseparable from AWS Lambda/Azure Functions.
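To make the hidden-cost point concrete, here is a rough break-even sketch. It assumes a simple pay-per-use model (a fee per million requests plus a fee per GB-second of compute) and a flat monthly price for a dedicated server. The `UsagePricing` type, its fields, and every number you feed it are hypothetical inputs, not any provider's published rates.

```rust
/// Hypothetical pay-per-use pricing. The fields are illustrative inputs,
/// not any provider's published rates.
#[derive(Clone, Copy)]
struct UsagePricing {
    per_million_requests: f64,
    per_gb_second: f64,
    gb_seconds_per_request: f64,
}

impl UsagePricing {
    /// Cost of a single invocation: request fee plus compute fee.
    fn cost_per_request(&self) -> f64 {
        self.per_million_requests / 1_000_000.0
            + self.gb_seconds_per_request * self.per_gb_second
    }

    /// Monthly bill for `requests` invocations. It grows linearly with traffic.
    fn monthly_cost(&self, requests: u64) -> f64 {
        requests as f64 * self.cost_per_request()
    }

    /// Approximate monthly request count at which pay-per-use matches a
    /// flat-rate server costing `flat_monthly_cost`. If the per-request
    /// cost is zero, the result saturates at `u64::MAX`.
    fn break_even_requests(&self, flat_monthly_cost: f64) -> u64 {
        (flat_monthly_cost / self.cost_per_request()).ceil() as u64
    }
}
```

Plug in your own invoice figures: below the break-even count serverless is the cheaper option, and above it every additional request widens the gap in favor of the flat-rate server.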

One Fortune 500 client spent $2.1M unwinding a serverless migration—a cautionary tale.

The Bigger Picture

Beyond cost savings, on-premise infrastructure lets you:

- Fund employee raises instead of subsidizing Bezos' space ventures.
- Future-proof your architecture against cloud price hikes.
- Reduce energy waste (our setup uses 90% less power than AWS).